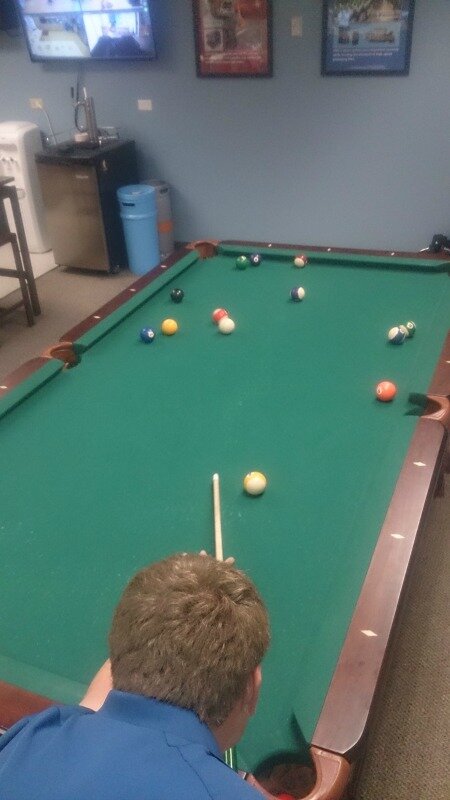

Every DMC office has at least one table sport at its heart. For Denver, that game is pool. So on FedEx Day 2016, Otto, Tyler, and I set out to automate our office Spencer Marston pool table. What does that look like, you may ask? Our goal was to automatically detect which pool balls remain on the table, so that during our 3-way, 5-way, or even 7-way cutthroat games we don't have to keep track of which balls have been sunk and which have not.

Preparation was key for this project. Before FedEx Day we selected a camera, chose a software plan of attack, mounted the camera, played some pool to "test", and created a camera driver. The camera was a basic webcam with a 90-degree field of view and 1080p resolution, which gave an image wide enough to capture both the pool table felt and the rails. For software we chose LabVIEW, since DMC has experience with NI's versatile VAS and VDM software libraries, and planned to integrate it with our existing custom Java-based pool application. With the driver written, we were ready to start algorithm development on FedEx Day.

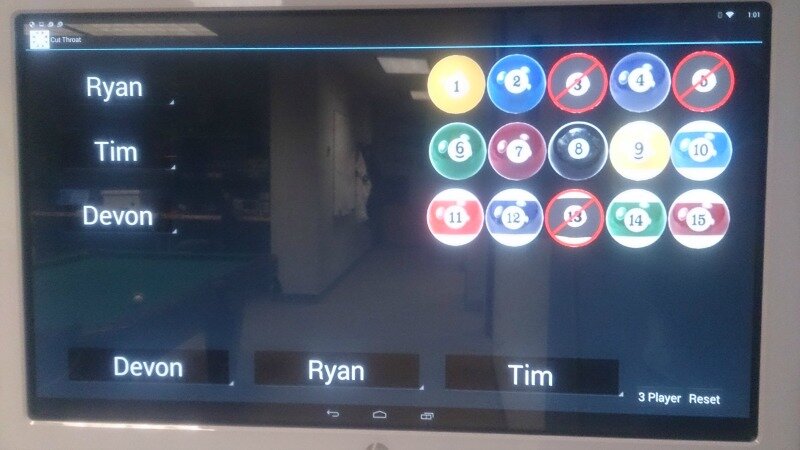

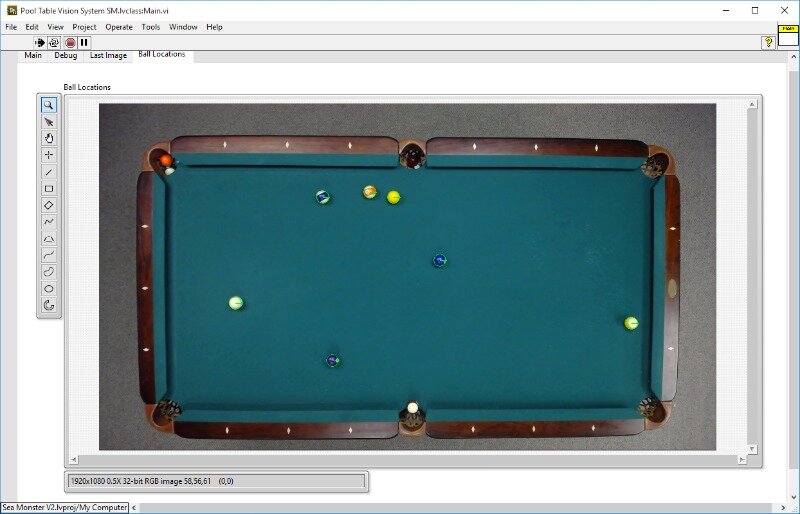

The following software was developed on FedEx Day: the software architecture/application, the calibration algorithms, the ball location algorithm, the ball color and pattern algorithm, and the "Active Shooter" algorithm. The application used a Producer/Consumer architecture with a single state machine to collect an image and determine the state of the pool table.

Each ball was calibrated by snapping an image of it to get a known representation of that ball's RGB value and whiteness. Each ball's location was detected from the HSB differences between a ball and the felt. Each detected ball was then matched to the calibrated ball whose RGB value was the closest match. The pattern of a ball, striped vs. solid, was determined by the white content of each detected ball: more white meant striped, less meant solid, and lots of white meant the cue ball. Finally, to decide when to analyze the state of the pool table, an algorithm was written to detect whether a player was shooting and therefore blocking the camera's view of the balls.
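To make the color and pattern steps more concrete, here is a minimal Python sketch of the idea. It is not our LabVIEW code: the calibration values, the thresholds, the felt hue, and every function name are assumptions chosen for illustration, and a ball is represented simply as a list of RGB pixels. It uses HSB (called HSV in Python's `colorsys`) to tell felt and white pixels apart, a white-fraction test for cue/striped/solid, and a nearest-RGB match against the calibrated colors.

```python
import colorsys
from math import dist

# Hypothetical calibration table: color name -> RGB captured at calibration time.
CALIBRATION = {
    "yellow": (230, 190, 40),
    "blue": (30, 60, 170),
    "red": (200, 40, 40),
}

# Assumed thresholds; real values would come from tuning against the actual table.
FELT_HUE = 0.38          # approximate hue of green felt on a 0..1 scale
HUE_TOLERANCE = 0.06
WHITE_MAX_SAT = 0.2      # white pixels have low saturation...
WHITE_MIN_VALUE = 0.8    # ...and high brightness
STRIPE_THRESHOLD = 0.25  # white fraction at or above this means striped
CUE_THRESHOLD = 0.80     # white fraction at or above this means cue ball


def to_hsv(rgb):
    """Convert an 8-bit RGB tuple to HSV with each component in 0..1."""
    return colorsys.rgb_to_hsv(*(c / 255 for c in rgb))


def is_felt(rgb):
    """True if the pixel's hue is close to the felt hue and it is not washed out."""
    h, s, _ = to_hsv(rgb)
    return abs(h - FELT_HUE) <= HUE_TOLERANCE and s > 0.3


def is_white(rgb):
    """True for bright, unsaturated pixels."""
    _, s, v = to_hsv(rgb)
    return s <= WHITE_MAX_SAT and v >= WHITE_MIN_VALUE


def classify_pattern(pixels):
    """Classify a ball as cue, striped, or solid from its fraction of white pixels."""
    white_fraction = sum(is_white(p) for p in pixels) / len(pixels)
    if white_fraction >= CUE_THRESHOLD:
        return "cue"
    if white_fraction >= STRIPE_THRESHOLD:
        return "striped"
    return "solid"


def best_match(rgb):
    """Return the calibrated color with the smallest Euclidean RGB distance."""
    return min(CALIBRATION, key=lambda name: dist(CALIBRATION[name], rgb))


def identify(pixels):
    """Return (color, pattern) for the pixels belonging to one detected ball."""
    pattern = classify_pattern(pixels)
    if pattern == "cue":
        return ("white", "cue")
    # Average only the non-white pixels so a stripe does not dilute the color.
    colored = [p for p in pixels if not is_white(p)]
    mean = tuple(sum(p[i] for p in colored) / len(colored) for i in range(3))
    return (best_match(mean), pattern)
```

For example, `identify([(30, 60, 170)] * 60 + [(250, 250, 250)] * 40)` classifies a ball that is 40% white as a striped blue ball. Averaging only the non-white pixels is a design choice of this sketch, not something taken from our application.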

After a day of programming, we did it! The system correctly identified the location of the balls and which balls were left on the table. There were some challenges along the way, though: lighting, RGB vs. HSB, and the USB standard, to name a few. As always, lighting is very important in vision applications. The issue we ran into was shadowing around each ball, which distorted the RGB color measurement near the edges of the ball and could lead to incorrect ball color detection. HSB proved to be a valuable domain to work in and was useful for distinguishing the felt of the table from the color of the balls. As for the USB standard, we used a USB webcam for the proof of concept. Our initial cable was longer than 5 meters and caused camera connection issues, so we had to shorten the USB connection to the camera to under 5 meters. It was not a devastating issue, but it is one to plan for.

There are a lot of fun future applications to build now that we can detect which balls remain on the table. The next major step will be to integrate the system with our existing 3/5-way cutthroat application so that it automatically updates which balls are sunk. If you have any fun ideas, let me know by posting them below!